Building a Marketing Data Platform: Architecture, Tools, and Best Practices

A Marketing Data Platform brings together every signal your brand captures—from ad impressions and web events to CRM interactions and product usage—so you can measure performance, activate audiences, and drive profitable growth with confidence.

While data warehouses and customer data platforms have existed for years, the Marketing Data Platform (MDP) focuses specifically on unifying marketing-relevant data and making it usable for analytics and activation. For a helpful outside perspective, you can browse this concise overview of marketing data platforms to see how the term is evolving and where it overlaps with adjacent categories.

In practical terms, an MDP standardizes how campaigns, audiences, spend, and outcomes are modeled, so that your organization can answer both strategic and tactical questions quickly. It becomes the source of truth for budget allocation, channel mix, incrementality testing, and lifecycle personalization—without requiring every team to wrangle raw logs or inconsistent dashboards.

As you plan your build, remember that the marketing landscape is changing under our feet. New privacy rules, the fading of third-party cookies, and AI-native tools are reshaping how data is collected and activated. For a future-facing lens, see this thought piece on the future of marketing intelligence and AI-ready growth engines—it provides a useful backdrop for designing systems that won’t be obsolete next year.

What is a Marketing Data Platform?

An MDP is the set of processes, models, and services that consolidate all marketing-relevant data into a governed, queryable, and activation-ready foundation. It is not merely a tool; it is an operational capability built on top of modern data infrastructure. The Marketing Data Platform sits between raw data producers (ad platforms, web and mobile trackers, email service providers, CRMs, and product analytics) and downstream consumers (analysts, data science, marketing automation, experimentation, and BI).

Compared with a generic enterprise data platform, the MDP codifies a domain model for marketing—think campaigns, channels, ad groups, creatives, offers, audiences, conversions, and lifetime value—and applies consistent attribution rules, spend normalizations, and identity stitching. This domain-centric approach reduces time-to-insight and enables repeatable, trustworthy reporting.

Core components of an MDP

1) Data ingestion and connectors

Bring data in from ad APIs, CRM exports, web and app event streams, and third-party measurement tools. Prefer managed ELT connectors for reliability, but ensure you can fall back to custom ingestion when APIs change or quotas bite. Capture both batch loads (daily spend summaries) and streaming events (real-time conversion pings) to balance freshness and cost.
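When a managed connector breaks or an API starts rejecting requests, a custom fallback usually has to page through results and back off on quota errors. The sketch below is one way to do that, under two assumptions: a hypothetical `fetch_page(cursor)` callable that returns `{"rows": [...], "next_cursor": ...}`, and a hypothetical `QuotaExceeded` exception. Real ad APIs differ in how they paginate and how they report errors.

```python
import random
import time
from typing import Callable, Iterator, Optional


class QuotaExceeded(Exception):
    """Hypothetical error a custom connector raises when the source API rate-limits it."""


def fetch_with_backoff(
    fetch_page: Callable[[Optional[str]], dict],
    cursor: Optional[str] = None,
    max_retries: int = 5,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> Iterator[dict]:
    """Page through a source API, retrying quota errors with exponential backoff.

    `fetch_page(cursor)` is assumed to return {"rows": [...], "next_cursor": str | None}.
    """
    while True:
        for attempt in range(max_retries + 1):
            try:
                page = fetch_page(cursor)
                break
            except QuotaExceeded:
                if attempt == max_retries:
                    raise  # give up and let the orchestrator alert
                # Wait base_delay * 2^attempt seconds, plus up to 10% random jitter.
                delay = base_delay * (2 ** attempt)
                sleep(delay + random.uniform(0, delay * 0.1))
        yield from page["rows"]
        cursor = page.get("next_cursor")
        if cursor is None:
            return
```

Injecting `sleep` keeps the function testable. Persisting the last successful cursor also lets a failed run resume where it stopped instead of reloading everything.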

2) Identity resolution

Many marketing questions depend on joining anonymous web activity to known customer profiles. An MDP should support multiple identifiers (hashed emails, device IDs, login IDs) and deterministic rules for stitching them together. Where policy allows, probabilistic methods can fill gaps, but always tag match confidence so analysts can filter and validate.
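Deterministic stitching can be treated as a graph problem. Each identifier is a node, and each observed link is an edge: a login on a device, or a hashed email in a CRM record. Connected components then become profiles. Below is a minimal union-find sketch. It assumes namespaced string identifiers such as `email:<hash>`, records a confidence score for every link, and tags each profile with the confidence of its weakest link.

```python
from collections import defaultdict


class IdentityGraph:
    """Union-find over identifiers; every link keeps its match confidence and method."""

    def __init__(self) -> None:
        self.parent: dict[str, str] = {}
        self.edges: list[tuple[str, str, float, str]] = []

    def _find(self, x: str) -> str:
        self.parent.setdefault(x, x)
        while self.parent[x] != x:
            self.parent[x] = self.parent[self.parent[x]]  # path halving
            x = self.parent[x]
        return x

    def link(self, a: str, b: str, confidence: float = 1.0, method: str = "deterministic") -> None:
        """Record that identifiers a and b belong to the same person."""
        self.edges.append((a, b, confidence, method))
        ra, rb = self._find(a), self._find(b)
        if ra != rb:
            self.parent[rb] = ra

    def profiles(self) -> list[dict]:
        """Group identifiers into profiles tagged with their weakest-link confidence."""
        members = defaultdict(set)
        for node in list(self.parent):
            members[self._find(node)].add(node)
        min_conf: dict[str, float] = {}
        for a, _, conf, _ in self.edges:
            root = self._find(a)
            min_conf[root] = min(min_conf.get(root, 1.0), conf)
        return [
            {"ids": sorted(ids), "min_confidence": min_conf.get(root, 1.0)}
            for root, ids in members.items()
        ]
```

Analysts can filter on `min_confidence`. For example, deterministic-only reporting keeps just the profiles at 1.0. Using the minimum is a deliberately conservative choice, and other scoring schemes are possible.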

3) Data modeling and transformation

Use versioned, testable SQL or code-based transformations to build conformed dimensions and facts: campaign, channel, creative, audience, spend, conversions, revenue, and LTV. Apply business logic for attribution windows, deduplication, and exchange-rate normalization. Keep lineage visible so marketers can trace a metric to the underlying sources.
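The sketch below makes those business rules concrete with a minimal last-touch attribution routine in plain Python. It deduplicates conversions by order ID, normalizes revenue to USD, and credits only touches that fall inside a 7-day window. Last-touch, the window length, and the fixed `FX_TO_USD` rates are all illustrative assumptions. In a real MDP this logic would usually live in versioned SQL models.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass(frozen=True)
class Touch:
    user_id: str
    campaign_id: str
    ts: datetime


@dataclass(frozen=True)
class Conversion:
    order_id: str
    user_id: str
    ts: datetime
    amount: float
    currency: str


# Hypothetical fixed rates to USD; in practice these come from a dated FX reference table.
FX_TO_USD = {"USD": 1.0, "EUR": 1.10, "GBP": 1.25}


def attribute_last_touch(touches, conversions, window=timedelta(days=7)):
    """Dedupe conversions by order_id, convert revenue to USD, and credit the
    most recent touch within the attribution window before each conversion."""
    seen = set()
    results = []
    for conv in sorted(conversions, key=lambda c: c.ts):
        if conv.order_id in seen:  # drop duplicate pings for the same order
            continue
        seen.add(conv.order_id)
        eligible = [
            t for t in touches
            if t.user_id == conv.user_id and conv.ts - window <= t.ts <= conv.ts
        ]
        winner: Optional[Touch] = max(eligible, key=lambda t: t.ts, default=None)
        results.append({
            "order_id": conv.order_id,
            "campaign_id": winner.campaign_id if winner else None,  # None = unattributed
            "revenue_usd": round(conv.amount * FX_TO_USD[conv.currency], 2),
        })
    return results
```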

4) Storage and performance

Most modern MDPs anchor on a scalable cloud data warehouse or lakehouse. Partition large tables by date, cluster or sort them by campaign attributes, and create aggregate tables for common queries. Use materialized views for frequently accessed KPI slices, and consider query acceleration features to keep dashboard latency under a few seconds.
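A pre-aggregated table is usually just a `GROUP BY` at the grain that dashboards query most often. The sketch below uses Python's built-in `sqlite3` as a stand-in warehouse. The `raw_ad_spend` and `agg_daily_campaign` table and column names are hypothetical, and warehouse-specific partitioning and materialized-view syntax is not shown.

```python
import sqlite3


def build_daily_campaign_agg(conn: sqlite3.Connection) -> None:
    """Rebuild a pre-aggregated KPI table at the (date, campaign) grain.

    SQLite has no partitioning or materialized views, so CREATE TABLE ... AS SELECT
    plus an index on the grain columns approximates what a warehouse does natively.
    """
    conn.executescript(
        """
        DROP TABLE IF EXISTS agg_daily_campaign;
        CREATE TABLE agg_daily_campaign AS
        SELECT event_date,
               campaign_id,
               SUM(spend_usd)   AS spend_usd,
               SUM(impressions) AS impressions,
               SUM(clicks)      AS clicks
        FROM raw_ad_spend
        GROUP BY event_date, campaign_id;
        CREATE INDEX idx_agg_daily_campaign ON agg_daily_campaign (event_date, campaign_id);
        """
    )
```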

5) Activation and reverse ETL

Insights must move into action. A reverse ETL layer publishes modeled audiences and attributes back into ad platforms, email tools, and personalization systems. Build reusable audience definitions (e.g., “High-propensity repeat buyers”) with governance guardrails and audit logs so you can prove what was sent, when, and why.
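A reverse ETL audit trail can be as simple as one record per sync. Each record captures the audience definition version, the destination, the member count, and a fingerprint of the exact member set. In this sketch, `send` is a hypothetical stand-in for whatever destination client you use. The empty-audience and oversized-audience checks are example guardrails, not a standard.

```python
import hashlib
import json
from datetime import datetime, timezone
from typing import Callable, Iterable


def sync_audience(
    name: str,
    definition_version: str,
    members: Iterable[str],
    destination: str,
    send: Callable[[str, list[str]], None],
    audit_log: list[dict],
    max_size: int = 1_000_000,
) -> dict:
    """Push an audience to a destination and record what was sent, when, and why."""
    ids = sorted(set(members))
    if not ids:  # guardrail: an empty audience usually means an upstream failure
        raise ValueError(f"audience {name!r} is empty; refusing to sync")
    if len(ids) > max_size:  # guardrail against a runaway definition
        raise ValueError(f"audience {name!r} has {len(ids)} members, above max_size={max_size}")
    send(destination, ids)
    record = {
        "audience": name,
        "definition_version": definition_version,  # ties the sync to the audience rule ("why")
        "destination": destination,
        "member_count": len(ids),
        # Fingerprint of the exact member set ("what") without copying identifiers into the log.
        "members_sha256": hashlib.sha256(json.dumps(ids).encode()).hexdigest(),
        "sent_at": datetime.now(timezone.utc).isoformat(),  # "when"
    }
    audit_log.append(record)
    return record
```

Because the member list is sorted before hashing, the same set of people always yields the same fingerprint. That makes it possible to prove later which set was sent.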

6) Observability and cost control

Track freshness, row counts, schema changes, failed loads, and unit costs per table and per connector. Instrument dashboards for data reliability SLAs (e.g., “yesterday’s spend loaded by 8am local”). A few alerting rules can save hours of triage time and keep teams trusting the numbers.
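An SLA such as "yesterday's spend loaded by 8am local" comes down to a small check that a scheduler can run every few minutes. Here is a minimal sketch. It assumes you can query the latest loaded `event_date` from the spend table (a hypothetical column name) and that you pass in the relevant time zone.

```python
from datetime import date, datetime, time, timedelta, timezone, tzinfo
from typing import Optional


def spend_freshness_breached(
    latest_loaded_date: Optional[date],
    now: datetime,
    tz: tzinfo = timezone.utc,
    deadline: time = time(8, 0),
) -> bool:
    """True if the local deadline has passed and yesterday's spend has not landed.

    `now` must be timezone-aware; `latest_loaded_date` is the max event_date loaded so far.
    """
    local_now = now.astimezone(tz)
    yesterday = local_now.date() - timedelta(days=1)
    if local_now.time() < deadline:
        return False  # SLA window is still open
    return latest_loaded_date is None or latest_loaded_date < yesterday
```

In real deployments, pass a `zoneinfo.ZoneInfo` object so daylight-saving changes are handled. A fixed UTC offset is only correct for part of the year in many regions.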

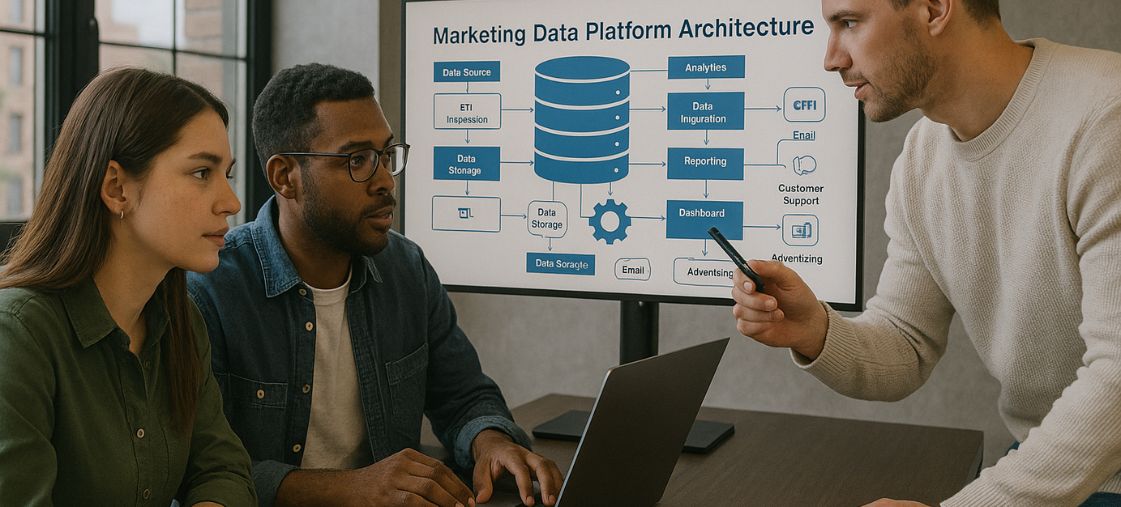

Reference architecture

An effective Marketing Data Platform generally follows a layered architecture:

- Sources: Ad platforms (Google, Meta, TikTok, programmatic), web/app events, CRM, email/SMS, affiliate networks, surveys, and offline media trackers.

- Ingestion: Managed ELT (e.g., vendor connectors) plus custom pipelines for niche sources; streaming where needed.

- Storage: Cloud data warehouse or lakehouse as the central store.

- Transform: Versioned modeling with CI tests; semantic layer defines metrics and dimensions.

- Serve: BI dashboards, notebooks, experimentation tools, and reverse ETL for activation.

- Govern: Access control, data contracts, privacy enforcement, and cost governance.

Batch vs. streaming is usually a business decision. If campaigns are optimized daily, batch is often sufficient and cheaper. If your growth team needs sub-hour feedback loops (e.g., suppressing churn-risk users from paid retargeting), stream critical events and aggregate them quickly into activation-ready features.
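For the churn-risk suppression example, the streaming logic can be as small as a per-user sliding window over risk events. This sketch assumes events arrive in timestamp order. The event names, the one-hour window, and the threshold of two are hypothetical placeholders. A production setup would run equivalent logic in a stream processor rather than in-process Python.

```python
from collections import defaultdict, deque
from datetime import datetime, timedelta


class ChurnRiskSuppressor:
    """Sliding-window aggregation of churn-risk events into a suppression set."""

    # Hypothetical event names treated as churn-risk signals.
    RISK_EVENTS = {"cancel_page_view", "downgrade_click", "support_complaint"}

    def __init__(self, window: timedelta = timedelta(hours=1), threshold: int = 2) -> None:
        self.window = window
        self.threshold = threshold
        self.recent: dict[str, deque] = defaultdict(deque)
        self.suppressed: set[str] = set()

    def process(self, user_id: str, event: str, ts: datetime) -> bool:
        """Consume one event; return True only when the user is newly suppressed."""
        if event not in self.RISK_EVENTS:
            return False
        q = self.recent[user_id]
        q.append(ts)
        while q and q[0] < ts - self.window:  # evict risk events older than the window
            q.popleft()
        if len(q) >= self.threshold and user_id not in self.suppressed:
            self.suppressed.add(user_id)
            return True
        return False
```

A `True` return is the moment to push the user to the paid-retargeting exclusion list.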

Build vs. buy

Most organizations land on a hybrid approach. Buy managed ingestion to reduce maintenance toil. Build the domain model and metric logic that encode your unique view of growth. Buy reverse ETL and governance to accelerate time to value. Build the parts that differentiate your decision-making—such as your LTV model, incrementality framework, or custom attribution rules.

Evaluate vendors on connector coverage, reliability SLAs, transparency of schemas, cost predictability, and the ability to export your data without lock-in. Internally, ensure you have enough engineering capacity to maintain tests, lineage, and semantic definitions. A vendor can provide speed, but you still own the definitions of “truth.”

Suggested tooling stack

There is no single correct stack, but a representative setup might include:

- Warehouse/Lakehouse: Scalable cloud platform with strong SQL support and fine-grained permissions.

- ELT: Managed connectors for ad platforms, CRM, and analytics; fallback scripts for edge sources.

- Transformation: Versioned SQL models with tests, documentation, and CI/CD.

- Semantic layer: Central metrics with governed definitions (e.g., spend, CAC, ROAS, LTV), including whether each is measured with multi-touch attribution (MTA) or media mix modeling (MMM).

- Reverse ETL: Audience syncs and attribute pushes with monitoring and audit logs.

- BI and notebooks: Dashboards for marketers and exploration for analysts and data scientists.

- Observability: Data quality checks, freshness SLAs, and cost monitoring.

- Privacy/Governance: Consent management, PII tagging, access policies, and key rotation.
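One way to picture the semantic layer in this stack is as a registry that every consumer reads from, so a metric like CAC is defined exactly once. This is a hypothetical sketch, not the API of any particular semantic-layer product, and the column names are assumptions.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Metric:
    name: str
    numerator: str              # column in the modeled, aggregated fact table
    denominator: Optional[str]  # None for simple sums
    description: str


# Hypothetical governed registry: BI and activation both resolve metrics here,
# so "CAC" means the same thing in every dashboard and audience rule.
METRICS = {
    m.name: m
    for m in [
        Metric("spend", "spend_usd", None, "Media spend in USD"),
        Metric("cac", "spend_usd", "new_customers", "Spend per newly acquired customer"),
        Metric("roas", "revenue_usd", "spend_usd", "Attributed revenue per dollar of spend"),
    ]
}


def compute(metric_name: str, row: dict) -> Optional[float]:
    """Evaluate a registered metric on one aggregated row; None if the denominator is zero."""
    m = METRICS[metric_name]  # KeyError for unregistered metrics: no ad hoc formulas
    num = row[m.numerator]
    if m.denominator is None:
        return num
    den = row[m.denominator]
    return None if den == 0 else num / den
```

`compute` divides values on a row that is already aggregated, so ratios come out as a ratio of sums (total spend over total new customers). Averaging per-day ratios can distort the result, and this approach avoids that.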

Data quality, governance, and privacy

Trust is the product. Implement schema and freshness tests on critical tables—spend, impressions, clicks, sessions, conversions, and revenue. Add business logic checks (e.g., CAC must be non-negative; impression-to-click rates within expected ranges). Document known caveats for every source so stakeholders understand discrepancies (attribution model differences, sampling, anti-fraud filters).
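Business-logic checks like these are easy to express as assertions over modeled rows. The sketch assumes daily campaign rows with hypothetical `spend_usd`, `new_customers`, `impressions`, and `clicks` fields. The CTR bounds of 0.1% to 10% are placeholders that would need tuning per channel.

```python
def check_rows(rows: list[dict], ctr_bounds: tuple[float, float] = (0.001, 0.10)) -> list[str]:
    """Return human-readable failures for business-logic checks on daily campaign rows."""
    lo, hi = ctr_bounds
    failures = []
    for r in rows:
        key = f"{r['event_date']}/{r['campaign_id']}"
        # CAC must be non-negative whenever it is defined.
        if r["new_customers"] > 0:
            cac = r["spend_usd"] / r["new_customers"]
            if cac < 0:
                failures.append(f"{key}: negative CAC {cac:.2f}")
        # Click-through rate (clicks per impression) must sit in the expected range.
        if r["impressions"] > 0:
            ctr = r["clicks"] / r["impressions"]
            if not lo <= ctr <= hi:
                failures.append(f"{key}: CTR {ctr:.4f} outside [{lo}, {hi}]")
        elif r["clicks"] > 0:
            failures.append(f"{key}: {r['clicks']} clicks with zero impressions")
    return failures
```

Wiring a non-empty result to an alert, or to a blocked deploy in CI, keeps a suspicious load from reaching dashboards unnoticed.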

For privacy, tag all columns that can identify a person (PII) and apply masking in lower environments. Enforce least-privilege access: analysts may need aggregates, while activation systems need specific identifiers under strict controls. Keep detailed audit trails for data sent to external platforms.
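Here is a minimal masking sketch for lower environments. It assumes PII columns are tagged in a hypothetical `PII_COLUMNS` mapping and that a secret key comes from your secrets manager. It uses a keyed hash (HMAC-SHA256) rather than a plain hash. Masked values still join consistently across tables, but someone without the key cannot reverse them by hashing a list of known emails.

```python
import hashlib
import hmac

# Hypothetical column tags, e.g. maintained alongside the model definitions.
PII_COLUMNS = {"customers": {"email", "phone", "ip_address"}}


def mask_row(table: str, row: dict, env: str, key: bytes) -> dict:
    """Return the row unchanged in prod; elsewhere replace tagged PII with a keyed hash."""
    if env == "prod":
        return dict(row)
    tagged = PII_COLUMNS.get(table, set())
    return {
        col: (
            hmac.new(key, str(val).encode(), hashlib.sha256).hexdigest()
            if col in tagged and val is not None
            else val  # untagged columns and missing values pass through unchanged
        )
        for col, val in row.items()
    }
```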

Implementation roadmap

- Align on outcomes: Define decisions you want to improve (budget allocation, creative testing, lifecycle triggers).

- Inventory sources: List ad platforms, analytics tools, CRM, product events, and offline channels; map identifiers.

- Stand up ingestion: Prioritize connectors for your top-spend channels and most-used CRM systems.

- Model core tables: Campaigns, spend, conversions, and revenue; document attribution windows and business rules.

- Publish a semantic layer: Standardize metric names, time grains, and dimensions for reuse in BI and activation.

- Ship your first dashboards: Channel performance, CAC/ROAS trends, and cohort LTV.

- Add reverse ETL: Launch 1–3 high-impact audience syncs; monitor for drift and suppression accuracy.

- Harden with tests and alerts: Add freshness SLOs, anomaly detection, and cost monitoring.

- Iterate: Expand coverage to long-tail sources, and layer on MMM, incrementality, and causal inference as maturity grows.

KPIs and measurement

Choose a small set of KPIs that roll up to business outcomes. At the platform level, monitor data freshness, job success rates, and connector latency. At the business level, track CAC, ROAS, LTV/CAC ratio, marginal ROAS, payback periods, and incrementality. For audience programs, track precision/recall of targeting and suppression, not just click-through rates. When introducing media mix modeling (MMM), validate its directional guidance against holdout tests to build organizational trust.
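The business-level KPIs above are simple ratios, but their definitions still need pinning down. The sketch below uses common simplified definitions: undiscounted payback, and marginal ROAS measured between two observed spend levels. These are assumptions, not the only valid choices.

```python
def ltv_to_cac(ltv: float, cac: float) -> float:
    """Lifetime value returned per dollar of acquisition cost."""
    return ltv / cac


def payback_months(cac: float, monthly_gross_margin_per_customer: float) -> float:
    """Months of gross margin needed to recover CAC (undiscounted, flat margin assumed)."""
    return cac / monthly_gross_margin_per_customer


def marginal_roas(spend_a: float, revenue_a: float, spend_b: float, revenue_b: float) -> float:
    """Incremental revenue per incremental dollar between two spend levels."""
    return (revenue_b - revenue_a) / (spend_b - spend_a)


def precision_recall(targeted: set, should_target: set) -> tuple[float, float]:
    """Precision: share of targeted (or suppressed) users who should have been.
    Recall: share of users who should have been targeted that were actually reached."""
    tp = len(targeted & should_target)
    precision = tp / len(targeted) if targeted else 0.0
    recall = tp / len(should_target) if should_target else 0.0
    return precision, recall
```

In the marginal ROAS case from the test, average ROAS at the higher spend level is 45,000 / 12,000 = 3.75. The last 2,000 dollars of spend, however, returned only 2.5 per dollar, and that gap is what budget allocation decisions turn on.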

Common pitfalls and how to avoid them

- Over-modeling early: Start with the core tables and clear definitions before attempting edge cases.

- Ignoring identity: Without consistent stitching, channel reporting might look fine but lifecycle programs will underperform.

- Under-investing in governance: Lack of access controls and documentation erodes trust and slows adoption.

- Tool sprawl: Too many overlapping tools increase cost and confusion. Consolidate where possible.

- Opaque metrics: If stakeholders can’t trace a metric to its sources and rules, they will create shadow dashboards.

Conclusion

A robust Marketing Data Platform turns fragmented signals into a strategic asset. By combining reliable ingestion, a clear marketing domain model, a governed semantic layer, and activation via reverse ETL, you enable faster decisions and more effective growth loops. As you operationalize your MDP, set up tight feedback cycles between marketing, analytics, and engineering so insights continuously inform creative, bidding, and audience strategy. And when you research competitive landscapes or creative trends, consider complementing your dataset with competitive intelligence tools to inspire testing ideas and benchmark performance.

The payoff is compounding: cleaner data yields better models; better models drive more precise targeting and measurement; and more precise loops accelerate learning. Start small, codify your definitions, and invest in trust. The result is not just a set of dashboards, but a durable capability that makes marketing measurably smarter.